Tokenizer in Python

A huge amount of text data is available on the internet, but many of us are not familiar with the methods needed to start working with it. Text is also tricky to handle in Machine Learning, because machines work with numbers, not letters. So how is text data manipulated and cleaned before a model is created? To answer this question, let us explore some concepts from Natural Language Processing (NLP).

Solving an NLP problem is a process divided into multiple stages. First of all, we have to clean the unstructured text data before moving to the modeling stage. The data cleaning includes some key steps. These steps are as follows:

In this tutorial, we will learn about the very first of these steps, known as Tokenization. We will understand what Tokenization is and why it is necessary for Natural Language Processing (NLP). We will also look at several methods of performing Tokenization in Python.

Understanding Tokenization

Tokenization is the process of dividing a large quantity of text into smaller fragments known as Tokens. These tokens are useful for finding patterns and are regarded as the foundation step for stemming and lemmatization. The term Tokenization can also refer to the substitution of sensitive data elements with non-sensitive ones.

Natural Language Processing (NLP) is used to create applications like Text Classification, Sentiment Analysis, Intelligent Chatbots, Language Translation, and many more. It therefore becomes important to understand the patterns in the text in order to build such applications.

For now, consider stemming and lemmatization as the primary steps for cleaning text data with the help of Natural Language Processing (NLP). Tasks like Text Classification or Spam Filtering use NLP along with deep learning libraries like Keras and TensorFlow.

Understanding the Significance of Tokenization in NLP

In order to understand the significance of Tokenization, let us consider the English language as an example. Pick any sentence and keep it in mind while reading the following section.

Before processing a natural language, we have to identify the words that make up a string of characters. Tokenization is therefore the most fundamental step in Natural Language Processing (NLP). This step is necessary because the actual meaning of a text can be interpreted by analyzing each word present within it.

Now, let us consider the following string as an example:

My name is Jamie Clark.

After performing Tokenization on the above string, we would get the output shown below:

['My', 'name', 'is', 'Jamie', 'Clark']

There are various uses for performing this operation. We can utilize the tokenized form in order to:

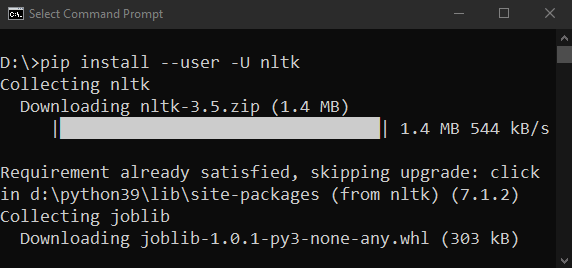
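As a rough illustration of the example sentence above, the following sketch produces the same token list and then uses it for two simple tasks: counting the tokens and counting how often each one occurs. The tutorial does not say how its output was produced, so the `\w+` pattern used here (regular expressions are covered later in this tutorial) and the counting step are assumptions made for illustration.

```python
import re
from collections import Counter

sentence = "My name is Jamie Clark."

# Assumption: keep runs of word characters, which drops the final full stop.
tokens = re.findall(r"\w+", sentence)
print(tokens)       # ['My', 'name', 'is', 'Jamie', 'Clark']

# Two simple things the token list makes easy:
print(len(tokens))  # 5

# Frequency of each token, ignoring case.
frequencies = Counter(token.lower() for token in tokens)
print(frequencies["jamie"])  # 1
```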

Now, let us understand several ways to perform Tokenization in Natural Language Processing (NLP) in Python.

Some Methods to perform Tokenization in Python

There are various methods of performing Tokenization on textual data. Some of them are described below:

Tokenization using the split() function in Python

The split() function is one of the basic methods available for splitting strings. It returns a list of strings after splitting the given string at the specified separator. By default, split() breaks a string at whitespace. However, we can specify a different separator as needed.

Let us consider the following examples:

Example 1.1: Word Tokenization using the split() function

Output:

["Let's", 'play', 'a', 'game,', 'Would', 'You', 'Rather!', "It's", 'simple,', 'you', 'have', 'to', 'pick', 'one', 'or', 'the', 'other.', "Let's", 'get', 'started.', 'Would', 'you', 'rather', 'try', 'Vanilla', 'Ice', 'Cream', 'or', 'Chocolate', 'one?', 'Would', 'you', 'rather', 'be', 'a', 'bird', 'or', 'a', 'bat?', 'Would', 'you', 'rather', 'explore', 'space', 'or', 'the', 'ocean?', 'Would', 'you', 'rather', 'live', 'on', 'Mars', 'or', 'on', 'the', 'Moon?', 'Would', 'you', 'rather', 'have', 'many', 'good', 'friends', 'or', 'one', 'very', 'best', 'friend?', "Isn't", 'it', 'easy', 'though?', 'When', 'we', 'have', 'less', 'choices,', "it's", 'easier', 'to', 'decide.', 'But', 'what', 'if', 'the', 'options', 'would', 'be', 'complicated?', 'I', 'guess,', 'you', 'pretty', 'much', 'not', 'understand', 'my', 'point,', 'neither', 'did', 'I,', 'at', 'first', 'place', 'and', 'that', 'led', 'me', 'to', 'a', 'Bad', 'Decision.']

Explanation:

In the above example, we have used the split() method to break the paragraph into smaller fragments, that is, words. Note that punctuation marks stay attached to the neighbouring word (for example, 'game,' and 'Rather!'). Similarly, we can also break the paragraph into sentences by passing the separator as the argument to the split() function.
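The behaviour of split() described above can be sketched as follows. The sample strings are shortened versions of the text that appears in this tutorial's example outputs, and the variable names are chosen here for illustration; this is not the tutorial's original listing.

```python
text = ("Let's play a game, Would You Rather! "
        "It's simple, you have to pick one or the other.")

# With no argument, split() breaks the string at runs of whitespace.
words = text.split()
print(words[:7])
# ["Let's", 'play', 'a', 'game,', 'Would', 'You', 'Rather!']

# With an explicit separator, the string is cut wherever that exact
# substring occurs. Here the separator is a full stop followed by a space.
paragraph = "Dreams. Desires. Reality. We always want to reflect the best of us."
sentences = paragraph.split(". ")
print(sentences)
# ['Dreams', 'Desires', 'Reality', 'We always want to reflect the best of us.']
```

The last sentence keeps its full stop because no space follows it, so the separator never matches there.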
As we know, a sentence generally ends with a full stop ".", which means that we can use "." as the separator to split the string.

Let us consider the same in the following example:

Example 1.2: Sentence Tokenization using the split() function

Output:

['Dreams', 'Desires', 'Reality', 'There is a fine line between dream to become a desire and a desire to become a reality but expectations are way far then the reality', 'Nevertheless, we live in a world of mirrors, where we always want to reflect the best of us', 'We all see a dream, a dream of no wonder what; a dream that we want to be accomplished no matter how much efforts it needed but we try.']

Explanation:

In the above example, we have used the split() function with the full stop (.) as its argument in order to break the paragraph at the full stops. The output shown, with no leading spaces and with the final full stop retained, corresponds to a separator made of a full stop followed by a space.

A major disadvantage of the split() function is that it accepts only one separator at a time, so the string can be split on a single separator only. Moreover, split() does not treat punctuation marks as separate tokens.

Tokenization using RegEx (Regular Expressions) in Python

Before moving on to the next method, let us briefly look at regular expressions. A Regular Expression, also known as RegEx, is a special sequence of characters that serves as a pattern for finding or matching other strings or sets of strings.

To work with RegEx, Python provides a library known as re. The re library is part of Python's standard library, so it does not need to be installed separately.

Let us consider the following examples of word tokenization and sentence tokenization using the RegEx method in Python.
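A minimal sketch of word tokenization with re.findall() is given here for orientation. The sample text is shortened from the output of Example 2.1 below, and the comparison between the two patterns is an addition for illustration rather than part of the original example.

```python
import re

text = ("Joseph Arthur was a young businessman. "
        "The Start-Up took its flight in the mid-90s.")

# [\w']+ matches runs of word characters and straight apostrophes;
# everything else (spaces, full stops, hyphens) acts as a boundary.
tokens = re.findall(r"[\w']+", text)
print(tokens)
# ['Joseph', 'Arthur', 'was', 'a', 'young', 'businessman',
#  'The', 'Start', 'Up', 'took', 'its', 'flight', 'in', 'the', 'mid', '90s']

# The apostrophe in the character class decides how possessives are handled.
possessive = "Ryan Cloud's Start-Up"
with_apostrophe = re.findall(r"[\w']+", possessive)
without_apostrophe = re.findall(r"\w+", possessive)
print(with_apostrophe)     # ['Ryan', "Cloud's", 'Start', 'Up']
print(without_apostrophe)  # ['Ryan', 'Cloud', 's', 'Start', 'Up']
```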
Example 2.1: Word Tokenization using the RegEx method in Python

Output:

['Joseph', 'Arthur', 'was', 'a', 'young', 'businessman', 'He', 'was', 'one', 'of', 'the', 'shareholders', 'at', 'Ryan', 'Cloud', 's', 'Start', 'Up', 'with', 'James', 'Foster', 'and', 'George', 'Wilson', 'The', 'Start', 'Up', 'took', 'its', 'flight', 'in', 'the', 'mid', '90s', 'and', 'became', 'one', 'of', 'the', 'biggest', 'firms', 'in', 'the', 'United', 'States', 'of', 'America', 'The', 'business', 'was', 'expanded', 'in', 'all', 'major', 'sectors', 'of', 'livelihood', 'starting', 'from', 'Personal', 'Care', 'to', 'Transportation', 'by', 'the', 'end', 'of', '2000', 'Joseph', 'was', 'used', 'to', 'be', 'a', 'good', 'friend', 'of', 'Ryan']

Explanation:

In the above example, we have imported the re library in order to use its functions. We have then used the findall() function of the re library. This function finds all the substrings that match the pattern passed as its argument and returns them as a list.

Here, "\w" represents any word character, that is, any alphanumeric character (letters and digits) or the underscore (_). The "+" means one or more occurrences. Thus, with the [\w']+ pattern the program collects consecutive word characters and apostrophes until it encounters any other character. Note that the output above shows 'Cloud' and 's' as separate tokens; this happens when the apostrophe in the text is not the straight apostrophe (') listed in the pattern, for example a typographic one.

Now, let's have a look at sentence tokenization with the RegEx method.
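Sentence tokenization with a compiled pattern might look like the sketch below. The sample text is partly taken from the Example 2.2 output and partly made up for illustration (the question is not from the original), and the trailing space in the pattern is an assumption chosen so that the resulting sentences carry no leading spaces.

```python
import re

text = ("Two new plant sites were finalized, and the construction was started. "
        "Was the product successful? "
        "Many popular magazines wrote about him.")

# Split wherever a full stop, question mark or exclamation mark
# is followed by a space.
sentence_pattern = re.compile(r"[.?!] ")
sentences = sentence_pattern.split(text)
print(sentences)
# ['Two new plant sites were finalized, and the construction was started',
#  'Was the product successful',
#  'Many popular magazines wrote about him.']

# For comparison: without the trailing space in the pattern, the pieces keep
# their leading spaces and an empty string appears after the final full stop.
bare_split = re.split(r"[.?!]", "Hi. Bye.")
print(bare_split)  # ['Hi', ' Bye', '']
```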
Example 2.2: Sentence Tokenization using the RegEx method in Python

Output:

['The Advertisement was telecasted nationwide, and the product was sold in around 30 states of America', 'The product became so successful among the people that the production was increased', 'Two new plant sites were finalized, and the construction was started', "Now, The Cloud Enterprise became one of America's biggest firms and the mass producer in all major sectors, from transportation to personal care", 'Director of The Cloud Enterprise, Ryan Cloud, was now started getting interviewed over his success stories', 'Many popular magazines were started publishing Critiques about him.']

Explanation:

In the above example, we have used the compile() function of the re library with the pattern '[.?!]' and then called the split() method of the compiled pattern to split the string at each match. As a result, the program splits the text as soon as it encounters any of these characters. The output shown, with no leading spaces and with the final full stop retained, corresponds to a pattern that also matches the space after the punctuation mark, such as '[.?!] '.

Tokenization using Natural Language ToolKit in Python

Natural Language ToolKit, also known as NLTK, is a library written in Python. NLTK is generally used for symbolic and statistical Natural Language Processing and works well with textual data.

NLTK is a third-party library that can be installed by running pip install nltk in a command shell or terminal.

To verify the installation, import the nltk library in a program (import nltk) and execute it. If the program does not raise an error, the library has been installed successfully. Otherwise, follow the installation procedure again and read the official documentation for more details.

NLTK has a module named tokenize. This module provides two kinds of tokenizers: Word Tokenize (word_tokenize()) and Sentence Tokenize (sent_tokenize()).
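The installation check described above can also be written so that it reports the result instead of stopping with an error. The sketch below uses importlib from the standard library to look for the package first; the variable name nltk_installed is chosen here for illustration.

```python
import importlib.util

# find_spec() returns None when the package cannot be located,
# so no ImportError is raised if NLTK is missing.
nltk_installed = importlib.util.find_spec("nltk") is not None

if nltk_installed:
    import nltk
    print("NLTK is installed, version", nltk.__version__)
else:
    print("NLTK was not found; install it with: pip install nltk")
```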

Let us consider some examples based on these two methods:

Example 3.1: Word Tokenization using the NLTK library in Python

Output:

['The', 'Advertisement', 'was', 'telecasted', 'nationwide', ',', 'and', 'the', 'product', 'was', 'sold', 'in', 'around', '30', 'states', 'of', 'America', '.', 'The', 'product', 'became', 'so', 'successful', 'among', 'the', 'people', 'that', 'the', 'production', 'was', 'increased', '.', 'Two', 'new', 'plant', 'sites', 'were', 'finalized', ',', 'and', 'the', 'construction', 'was', 'started', '.', 'Now', ',', 'The', 'Cloud', 'Enterprise', 'became', 'one', 'of', 'America', "'s", 'biggest', 'firms', 'and', 'the', 'mass', 'producer', 'in', 'all', 'major', 'sectors', ',', 'from', 'transportation', 'to', 'personal', 'care', '.', 'Director', 'of', 'The', 'Cloud', 'Enterprise', ',', 'Ryan', 'Cloud', ',', 'was', 'now', 'started', 'getting', 'interviewed', 'over', 'his', 'success', 'stories', '.', 'Many', 'popular', 'magazines', 'were', 'started', 'publishing', 'Critiques', 'about', 'him', '.']

Explanation:

In the above program, we have imported the word_tokenize() method from the tokenize module of the NLTK library. The method breaks the string into tokens and returns them as a list, which we then print. Unlike split(), this method returns full stops and other punctuation marks as separate tokens.
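A hedged sketch of both NLTK tokenizers follows. It assumes NLTK is installed and that the Punkt tokenizer data has been downloaded (for example with nltk.download('punkt'); newer NLTK releases name the resource 'punkt_tab'). The guards around the import and the call are additions for illustration so that the script reports a missing piece instead of crashing, and the sample text is shortened from the example outputs in this tutorial.

```python
import importlib.util

text = ("The product became so successful among the people that the "
        "production was increased. Two new plant sites were finalized, "
        "and the construction was started.")

word_tokens = None
sentence_tokens = None

if importlib.util.find_spec("nltk") is None:
    print("NLTK is not installed; install it with: pip install nltk")
else:
    from nltk.tokenize import sent_tokenize, word_tokenize
    try:
        # Punctuation marks come back as separate tokens.
        word_tokens = word_tokenize(text)
        # Each sentence keeps its closing full stop.
        sentence_tokens = sent_tokenize(text)
    except LookupError:
        # Raised when the Punkt tokenizer data has not been downloaded yet.
        print("Tokenizer data is missing; download it with nltk.download()")

print(word_tokens)
print(sentence_tokens)
```

If either message is printed, both results stay None, which makes it easy to tell a setup problem apart from an empty token list.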
Example 3.2: Sentence Tokenization using the NLTK library in Python

Output:

['The Advertisement was telecasted nationwide, and the product was sold in around 30 states of America.', 'The product became so successful among the people that the production was increased.', 'Two new plant sites were finalized, and the construction was started.', "Now, The Cloud Enterprise became one of America's biggest firms and the mass producer in all major sectors, from transportation to personal care.", 'Director of The Cloud Enterprise, Ryan Cloud, was now started getting interviewed over his success stories.', 'Many popular magazines were started publishing Critiques about him.']

Explanation:

In the above program, we have imported the sent_tokenize() method from the tokenize module of the NLTK library. The method breaks the paragraph into sentences and returns them as a list, which we then print. Each sentence keeps its closing punctuation mark.

Conclusion

In this tutorial, we have covered the concept of Tokenization and its role in the overall Natural Language Processing (NLP) pipeline. We have also discussed a few methods of Tokenization, including word tokenization and sentence tokenization, for a given text or string in Python.
